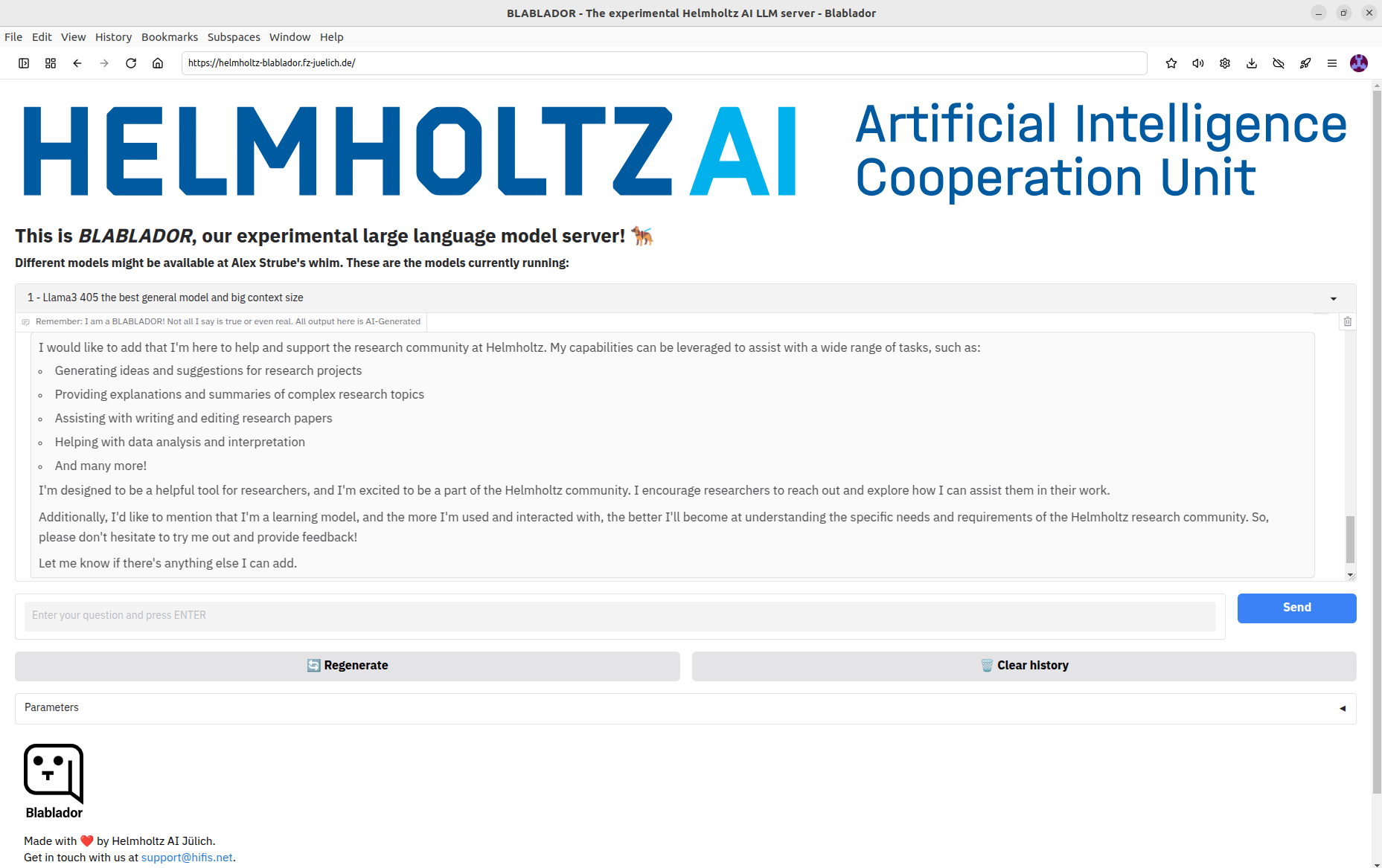

Are you looking for a large language model server you can use in the browser or integrate into your coding environment? Try BLABLADOR, the experimental Helmholtz AI service that is part of Helmholtz Cloud.

To avoid too much “bla bla”, here are the main points you need to know:

- it runs on Helmholtz AI GPU resources.

- everything is hosted in Jülich.

- your data is not used, and we don’t keep logs.

- it can be integrated into your preferred IDE as a co-pilot, as explained here.

- you can connect your own local RAG (retrieval-augmented generation) setup for specific use cases, as explained here.

- it’s free, and there are no token limits!*

- new models are regularly added.
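For a rough idea of what the IDE or script integration looks like under the hood, here is a minimal sketch that builds a chat request for an OpenAI-compatible endpoint using only the Python standard library. It assumes that BLABLADOR exposes such an API at the base URL shown; the model name and the `BLABLADOR_API_KEY` environment variable are hypothetical placeholders, so check the service documentation for the real values.

```python
import json
import os
import urllib.request

# ASSUMPTION: base URL of an OpenAI-compatible BLABLADOR API; verify in the official docs.
BASE_URL = "https://api.helmholtz-blablador.fz-juelich.de/v1"

# Hypothetical placeholder: replace with a model name from the service's model list.
MODEL = "your-model-name"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply (needs network access and a token)."""
    api_key = os.environ["BLABLADOR_API_KEY"]  # hypothetical environment variable name
    req = build_chat_request(prompt, api_key)
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With network access and a valid token in `BLABLADOR_API_KEY`, calling `ask("Explain RAG in one sentence.")` would return the model’s reply. Keeping request construction separate from sending makes it easy to inspect the payload offline, and many IDE assistant plugins can be pointed at an OpenAI-compatible endpoint in the same way.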

Something missing? Just ask BLABLADOR.

*Well, there are technical limitations: the context length is inherently limited by the model’s architecture and by the memory of the GPU the model runs on, but there are no restrictions on usage.